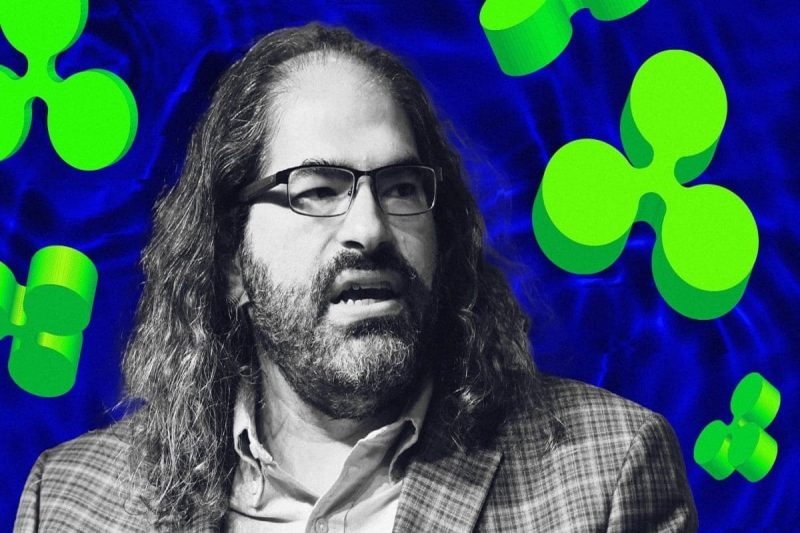

Ripple CTO David Schwartz has dismissed the legal case against Character.AI, asserting that it lacks merit under U.S. law. Schwartz shared his views on social media, emphasizing that while he is not defending Character.AI on moral grounds, the legal arguments made against the company are flawed.

Ripple CTO David Schwartz’s Argument on Free Speech

In a post on X (formerly Twitter), Schwartz argued that Character.AI’s actions fall under the protection of the First Amendment. He said the company’s chatbot platform produces expressive content, which remains protected unless it falls into one of the narrowly defined categories of unprotected speech, such as incitement or direct threats.

According to Schwartz, the lawsuit’s complaint revolves around the idea that Character.AI was reckless in how it designed its platform to generate speech.

He stated, “Any argument that the expressive contents of protected speech are reckless, dangerous, or ‘defective’ is wholly incompatible with freedom of speech.”

Schwartz compared the situation to previous moral panics over new forms of media, suggesting that the legal challenge against Character.AI mirrors past controversies involving video games, comic books, and other expressive content. He emphasized that regulating how a platform chooses the speech it produces would conflict with constitutional rights.

The Character.AI Lawsuit and Its Claims

The lawsuit, filed by the mother of a 14-year-old boy named Sewell Setzer III, accuses Character.AI of negligence, wrongful death, deceptive trade practices, and product liability. It alleges that the platform is “unreasonably dangerous” and lacked adequate safety measures, despite being marketed to minors.

The suit also references the company’s chatbots, which simulate characters from popular media, including TV shows like Game of Thrones. Setzer had reportedly interacted with these chatbots extensively before his death.

Character.AI’s founders, Noam Shazeer and Daniel De Freitas, are named in the lawsuit, along with Google, which hired the company’s leadership team in August. The plaintiff’s lawyers claim that the platform’s anthropomorphization of AI characters, and chatbots offering “psychotherapy without a license,” contributed to Setzer’s death.

The company has responded with updates to its safety protocols, including new age-based content filters and enhanced detection of harmful user interactions.

Character.AI’s Response and Policy Changes

Character.AI has implemented several changes to improve user safety in response to the incident and subsequent lawsuit. These updates include changes to the content accessible to minors, a reminder pop-up that alerts users that the chatbots are not real people, and a notification system for users who spend prolonged time on the platform. The company’s communications head, Chelsea Harrison, stated,

“We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family.”

Harrison further explained that the platform now has improved detection systems for user inputs related to self-harm or suicidal ideation, which direct users to the National Suicide Prevention Lifeline. Despite these efforts, the legal battle continues, with Character.AI maintaining that it remains committed to user safety.

Meanwhile, the Aptos Foundation has announced a new partnership with Flock IO to develop AI tools using the Move programming language. Move, originally designed by Meta for the Diem project, is now being adapted for broader blockchain applications.

The post Ripple CTO David Schwartz Dismisses CharacterAI Lawsuit appeared first on CoinGape.